Where does your base layer need to be?

The first in a series.

The foundation of any enterprise in the 21st century is its data center infrastructure, what we refer to as an enterprise’s “base layer.” The location of an enterprise’s base layer determines latency, power costs, power profile, data center operating and capital efficiency, and a host of other performance metrics. In this series, we will discuss each of these performance metrics in turn, to help those responsible for data center performance and its impact on enterprise value frame the major decisions they must make about their infrastructure.

A primer on latency

Latency, which is measured in milliseconds, is the time it takes for data to travel from its source to its destination (one-way latency). Latency is often quoted as a round-trip figure instead, meaning the time from when a request is sent to when the response is received.
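To make the one-way versus round-trip distinction concrete, here is a minimal sketch of the lower bound that distance alone puts on latency. It assumes light travels through optical fiber at roughly 200,000 km/s (about two-thirds of its speed in a vacuum); the function names are ours, and real-world latency will be higher once routing, queuing, and processing are added.

```python
# Assumption: signal speed in optical fiber is about 200,000 km/s,
# which works out to roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0


def one_way_latency_ms(distance_km: float) -> float:
    """Propagation delay only; ignores routing, queuing, and processing."""
    return distance_km / FIBER_KM_PER_MS


def round_trip_latency_ms(distance_km: float) -> float:
    """Request out plus response back over the same distance."""
    return 2 * one_way_latency_ms(distance_km)


if __name__ == "__main__":
    # Illustrative distances between a user and the data center.
    for km in (50, 1000, 4000):
        print(
            f"{km:>5} km: one-way {one_way_latency_ms(km):.2f} ms, "
            f"round trip {round_trip_latency_ms(km):.2f} ms"
        )
```

Even under these idealized assumptions, distance sets a floor on latency that no amount of hardware can remove, which is why the location of the base layer matters.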

Latency affects application performance, and therefore customer experience and enterprise value, but reducing it comes at a price. Understanding your latency requirements, and how edge computing may help you meet them, is a must for every organization.

How low must latency be?

It depends. For on-demand movies, it hardly matters whether a download takes 2 or 5 seconds to start. What matters, and what depends on bandwidth, is that each movie starts playing in, say, less than 30 seconds. Remote surgery and driverless cars, by contrast, may need less bandwidth, but for them even an extra 100 milliseconds of delay in getting information can literally be a matter of life or death. The obvious conclusion is that there cannot be one single value of “acceptable latency.” Currently, latency under 100ms is considered reasonable and acceptable in many cases. Ultra-low latency, not exceeding 10ms, is required only for certain workloads.
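As a rough illustration, the two thresholds mentioned above can be turned into a simple classifier. This is a sketch only: the tier names and the function are our own labels, and the cut-offs that matter for your applications may differ.

```python
# Thresholds taken from the text above; the tier names are our own labels.
ULTRA_LOW_MS = 10.0    # "ultra-low latency, not exceeding 10ms"
ACCEPTABLE_MS = 100.0  # "latency under 100ms is considered reasonable"


def latency_tier(latency_ms: float) -> str:
    """Place a measured latency into one of three illustrative tiers."""
    if latency_ms < 0:
        raise ValueError("latency cannot be negative")
    if latency_ms <= ULTRA_LOW_MS:
        return "ultra-low"
    if latency_ms < ACCEPTABLE_MS:
        return "acceptable"
    return "high"


if __name__ == "__main__":
    for sample_ms in (4, 45, 250):
        print(f"{sample_ms} ms -> {latency_tier(sample_ms)}")
```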

When it comes to latency, it is often easy to tell whether an application will not just benefit from edge computing but may in fact demand it to function at all: just think of telemedicine, multiplayer online gaming, automatic analysis of CCTV images or IoT sensor data, and many upcoming 5G services. Highly interactive applications like these require real-time computing and ultra-low latency, delivered by a base layer located at the edge, close to end users (“eyeballs”).

Understanding application requirements – the place to start

One of the best treatments we have come across of how to decide where your base layer needs to be is a 2017 report by 451 Research entitled “Datacenters at the Edge.”

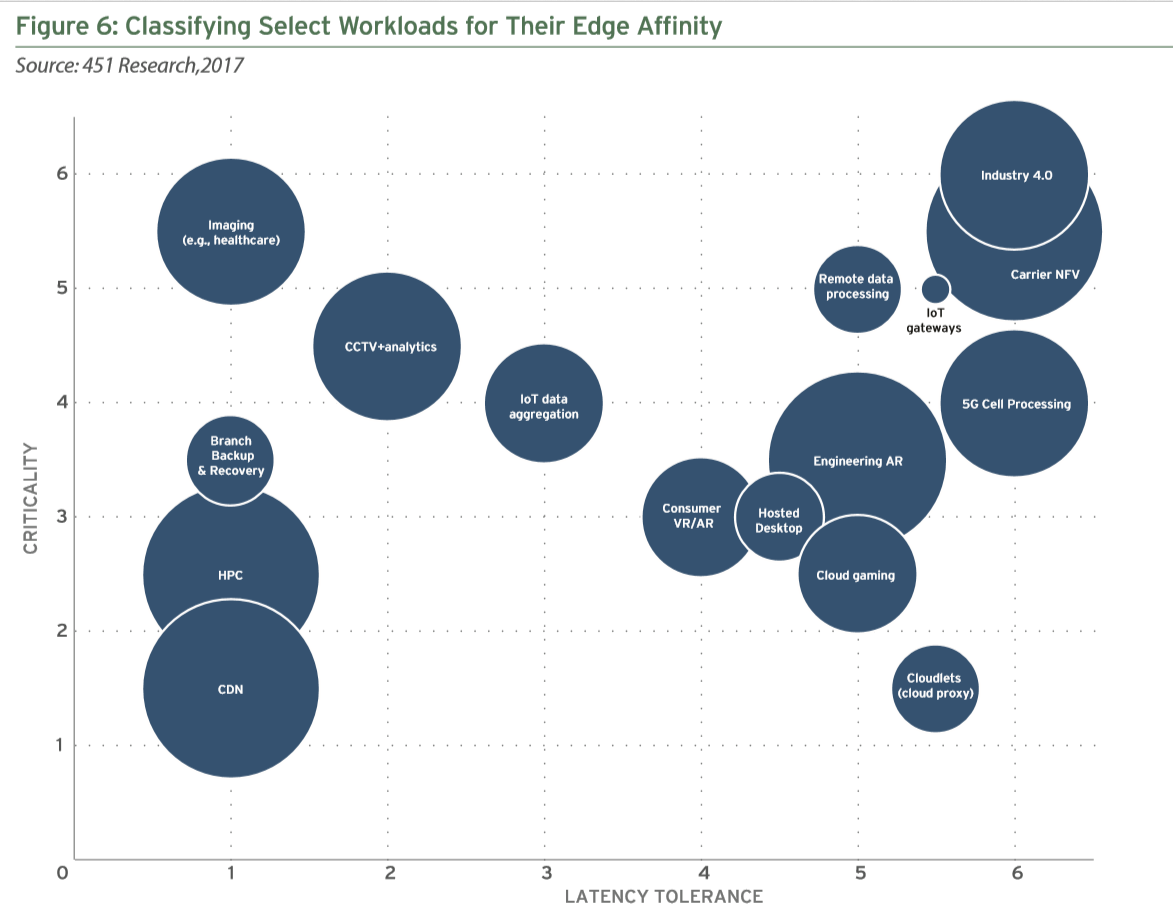

Author Rhonda Ascierto states that “there are three main technical factors affecting whether processing/storage should be at the edge or at a more central location: latency, volume of data created or needed locally and criticality (sensitivity to failure or delays).” She then develops a framework for scoring different workloads on these factors and portrays the results in this simple bubble chart/matrix.

Criticality of the workload, data throughput, and latency tolerance are the three drivers of an application’s position in the chart above. High-Performance Computing (HPC) applications and high-volume Content Delivery Networks (CDNs) need to limit storage and bandwidth costs, and will mostly require edge infrastructure. Workloads such as IoT gateways, remote data processing, and data archives can readily use core data centers, as they are less critical and can tolerate higher latency.
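To show how a portfolio might be scored on these three drivers, here is a minimal sketch. It is not the scoring method used in the 451 Research report: the 1-to-5 scale, the equal weighting, the 3.5 threshold, and the two example workloads and their scores are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Workload:
    """Hypothetical 1-5 scores for the three drivers (5 = strongest pull to the edge)."""
    name: str
    latency_sensitivity: int  # 1 = tolerates high latency, 5 = needs ultra-low latency
    local_data_volume: int    # 1 = little data created or needed locally, 5 = a lot
    criticality: int          # 1 = tolerant of failure or delay, 5 = highly sensitive

    def edge_score(self) -> float:
        # Assumption: equal weighting of the three factors.
        total = self.latency_sensitivity + self.local_data_volume + self.criticality
        return total / 3


def suggested_location(workload: Workload, threshold: float = 3.5) -> str:
    """Suggest "edge" when the average score reaches the (hypothetical) threshold."""
    return "edge" if workload.edge_score() >= threshold else "core"


if __name__ == "__main__":
    # Example scores are invented for illustration only.
    portfolio = [
        Workload("interactive example", 5, 4, 5),
        Workload("archive example", 1, 2, 1),
    ]
    for workload in portfolio:
        print(f"{workload.name}: {workload.edge_score():.2f} -> {suggested_location(workload)}")
```

In practice, the weights and the threshold would come from your own assessment of each application rather than from fixed defaults like these.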

Once these metrics are thoroughly understood across the entire application portfolio, the work of putting dollar figures on the benefits and costs of optimizing where each application is hosted can begin.